Military bases are among the latest signs that momentum is building in the geothermal space.

Trending Articles on HSE Now

-

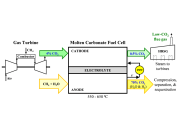

The US supermajor aims to speed the commercialization of a new liquid solvent that strips carbon dioxide from industrial flue gas.

-

SPE President Terry Palisch is joined by Paige McCown, SPE senior manager of communication and energy education, to discuss how members can improve the industry’s public image.

-

Failure to electrify facilities in the UK North Sea could soon result in a denial of petroleum licenses and the forced closure of some offshore assets.

-

Developing strong relationships within a leadership team is paramount for fostering collaboration, effective decision-making, and a positive organizational culture.

-

In the pursuit of eliminating serious injuries, illness, and fatalities, Shell has transitioned from its 12 life-saving rules to the nine established by the International Association of Oil and Gas Producers. This paper explains the context of the Life-Saving Rules and why Shell decided to transition, providing an overview of how it managed the change, the effects so …

-

The free virtual meeting plans to highlight state-of-the-art knowledge on improving safety culture and human/organizational performance in the oil field.

-

This article describes how leadership involvement in office and site visits can support and sustain an outstanding safety culture and outlines added benefits of conducting office and site visits.

-

The money from the Bipartisan Infrastructure Law will go to help Tribes plug orphaned oil and gas wells, combat climate change, and protect natural resources.

-

This paper describes development of technology with the capability to combust flare gases with a heating value up to 50% lower than existing flare-tip technology.

-

This paper describes a chemical-free process with a small footprint designed to capture exhaust from natural gas drive compressors and supporting gas-fueled production equipment.

-

The partnership seeks to shift the economics of carbon capture across high-emitting industrial sectors.