Initializing a reservoir simulator requires populating a 3D dynamic-grid-cell model with subsurface data and fit-for-purpose interrelational algorithms. In practice, these prerequisites rarely are satisfied fully. Implementation of four key points has enhanced the authentication of reservoir simulators through more-readily attainable history matches with less tuning required. This outcome is attributed to a more-systematic initialization process with a lower risk of artifacts. These benefits feed through to more-assured estimates of ultimate recovery and, hence, hydrocarbon reserves.

Introduction

Initialization is the process of rendering a subsurface-rock/fluid model into a representative starting point for reservoir simulation within the constraints introduced by imposing a 3D-grid array. The effectiveness of a hydrocarbon-reservoir simulator depends on the reliability of its initialization, which, in turn, is governed by the quality of the underpinning reservoir description. In the classical view, initialization establishes the correct volumetric distributions of fluids within the grid cells that represent the subsurface reservoir at initial conditions of assumed hydrostatic equilibrium. Yet a functional reservoir must not only store fluids but also allow them to flow. Therefore, an initialization process that is based on static data must render these data dynamically conditioned so that the ensuing dynamic model is as meaningful as possible. It is a prerequisite that the reservoir description be realistic and representative and that its conditioning for simulation be carried out in a manner that retains reservoir character in a workable format. These requirements are rarely satisfied in practice. Shortcomings can be traced to ambiguous terminology, inconsistent definitions of reservoir properties, inappropriate parameter selection, incomplete data sampling, inconsistent scaling up, cross-scale application of interpretative algorithms, erroneous identification of net reservoir, unrepresentative fluid analyses, and incorrect application of software options.

The objective is to improve the synergy between static and dynamic reservoir models. The subject matter is derived from the authors’ studies of challenging field situations (i.e., from real solutions). The proposed refinements are directed at obviating the shortcomings stated previously. They are restricted to water-wet systems within which intergranular flow predominates.

Pivotal Role of Porosity

Analysis of core-calibrated well logs usually delivers total or effective porosity according to the adopted petrophysical model. Therefore, if total porosity is imported from a static model, as is common practice, knowledge of either the shale-volume fraction or the electrochemically bound-water saturation is needed to convert total porosity to effective porosity. In dynamic simulation, porosity is used as part of a term in the fluid-flow equations, to estimate fluid volumes in place, to indicate rock type, or to indicate permeability.
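The conversion from total to effective porosity can be sketched in Python. This is a minimal illustration, assuming two commonly used relations: φe = φt(1 − Swb), where Swb is the electrochemically bound-water saturation, and φe = φt − Vsh·φt,sh, where φt,sh is the total porosity of the shale. The shale-porosity input and both function names are hypothetical, and the adopted petrophysical model may prescribe a different form.

```python
def effective_porosity_from_swb(phi_t: float, swb: float) -> float:
    """Effective porosity from total porosity and bound-water saturation.

    Assumes the bound water fills the fraction swb of the total pore
    volume, so phi_e = phi_t * (1 - swb).
    """
    if not (0.0 <= phi_t <= 1.0 and 0.0 <= swb <= 1.0):
        raise ValueError("porosity and saturation must be fractions")
    return phi_t * (1.0 - swb)


def effective_porosity_from_vsh(phi_t: float, vsh: float, phi_t_sh: float) -> float:
    """Effective porosity from total porosity and shale-volume fraction.

    Assumes (hypothetically) that all pore space in the shale, whose total
    porosity is phi_t_sh, holds bound water: phi_e = phi_t - vsh * phi_t_sh.
    The result is clipped at zero.
    """
    if not 0.0 <= vsh <= 1.0:
        raise ValueError("shale volume must be a fraction")
    return max(phi_t - vsh * phi_t_sh, 0.0)


# Example: 25% total porosity, 20% of the pore volume holding bound water.
print(round(effective_porosity_from_swb(0.25, 0.20), 4))
```

Either route needs an input that is not part of total porosity itself, which is why the shale-volume fraction or bound-water saturation must travel with a total-porosity model.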

Initialization Philosophy

The simulation exercise should focus on those volumes that contain net-reservoir rock. This means excluding volumes of rock that do not contribute to reservoir functionality, i.e., that lack a significant capability to store fluids and allow them to flow. The rationale is that dynamically modeling the behavior of rocks that do show net-reservoir character is difficult enough without also including nonreservoir rock, for which proper groundtruthing of model elements is unlikely. It is good practice to keep a dynamic model as simple as possible and to make it only as complex as is necessary to represent fluid storage and flow. Net-reservoir rock should be identified by cutoffs of shale volume, effective porosity, and free-fluid permeability, where the latter is determined independently of porosity. The cutoffs should be conditioned dynamically.
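Applying the three cutoffs to a sample can be sketched as below. The cutoff values, the `Cutoffs` structure, and the function name are hypothetical; in practice each cutoff would be set by dynamic conditioning rather than chosen a priori.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cutoffs:
    """Hypothetical cutoff values; in practice each is dynamically conditioned."""
    vsh_max: float    # maximum shale-volume fraction
    phi_e_min: float  # minimum effective porosity, fraction
    k_min_md: float   # minimum free-fluid permeability, mD


def is_net_reservoir(vsh: float, phi_e: float, k_md: float, c: Cutoffs) -> bool:
    """A sample counts as net reservoir only if it passes all three cutoffs.

    k_md should be a free-fluid permeability determined independently of
    porosity, so that the permeability cutoff adds information of its own.
    """
    return vsh <= c.vsh_max and phi_e >= c.phi_e_min and k_md >= c.k_min_md


cutoffs = Cutoffs(vsh_max=0.4, phi_e_min=0.08, k_min_md=1.0)  # illustrative only
samples = [(0.15, 0.21, 120.0), (0.55, 0.12, 3.0), (0.20, 0.10, 0.4)]
flags = [is_net_reservoir(v, p, k, cutoffs) for v, p, k in samples]
print(flags)
```

The second sample fails on shale volume and the third on permeability, even though both pass the porosity cutoff.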

Beyond this, the philosophy has four key elements. The first key element concerns the representativeness of data from the standpoints of reservoir description (i.e., parametric averages) and the generation of interpretative algorithms.

The second key element is directed at synthesis of these data and their interrelationships for input into a simulation model. It is important to distinguish net reservoir with dynamically conditioned discriminators as a basis for identifying flow units. Reservoir properties should be averaged over the net reservoir at the grid-cell-height scale, and relational algorithms should be established at this same scale for seamless incorporation into the simulator.
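Averaging over net reservoir at the grid-cell-height scale can be sketched as follows, assuming equally spaced log samples (0.15 m) and the 1.5-m reference cell height adopted in Stage 2 of this article. Porosity is averaged arithmetically over net samples only, which conserves net pore volume. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def average_cell(phi_e: Sequence[float], net: Sequence[bool]) -> Tuple[float, float]:
    """Net-to-gross ratio and net-averaged effective porosity for one cell.

    Inputs are equally spaced log samples lying within the cell. Because
    porosity is averaged over net samples only, cell height * NTG * phi_net
    equals the sum of (sample thickness * porosity) over the net samples.
    """
    if len(phi_e) != len(net) or not phi_e:
        raise ValueError("need equal-length, non-empty inputs")
    net_phi = [p for p, is_net in zip(phi_e, net) if is_net]
    ntg = len(net_phi) / len(phi_e)
    phi_net = sum(net_phi) / len(net_phi) if net_phi else 0.0
    return ntg, phi_net


def upscale_log(phi_e: Sequence[float], net: Sequence[bool],
                dz: float = 0.15, cell_h: float = 1.5) -> List[Tuple[float, float]]:
    """Block a log into cells of height cell_h (10 samples for 0.15 m and 1.5 m)."""
    n = round(cell_h / dz)
    return [average_cell(phi_e[i:i + n], net[i:i + n])
            for i in range(0, len(phi_e), n)]


# One 1.5-m cell: five net samples at 20% porosity, five non-net at 5%.
print(upscale_log([0.20] * 5 + [0.05] * 5, [True] * 5 + [False] * 5))
```

The non-net samples lower the net-to-gross ratio but do not dilute the net porosity, which keeps the cell properties tied to the rock that actually stores and transmits fluid.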

The third key element calls for assured internal consistency of transitional and irreducible capillary-bound-water saturation, residual- and free-hydrocarbon saturation, and free-fluid permeability as a component of relative permeability. This consistency must be established at initial-pressure and -stress conditions and over the net-reservoir volumes. The process is intrinsically related to dynamically driven data partitioning, such as flow-unit classification.

The final element is concerned with the nonuniformity of net-reservoir rock and the directional dependence of net-reservoir properties and their interrelationships. Here, nonuniformity refers to spatial variations in those characteristics that control reservoir quality. Nonuniformity can be ordered geologically by gravity and sedimentation processes, as in the case of a layered sand/shale sequence, or it can be disordered, perhaps because of erosion, sediment reworking, or tectonic disturbance. Anisotropy refers to those properties that have different values in different directions. Anisotropy can have an indirect effect in which a scalar quantity is predicted from a directionally dependent measurement (e.g., the use of a collimated density-log measurement to evaluate porosity). A formation can be homogeneous and yet anisotropic. All these matters are affected by the scale of measurement. For example, heterogeneity and anisotropy might be important at the core-plug scale but have little effect at the grid-cell-height scale.

Data Representativeness

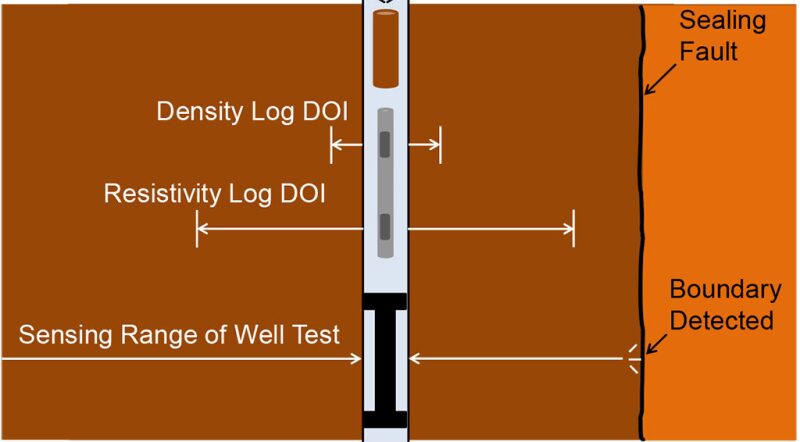

Most data used to initialize a simulation model are drawn from core analysis, core-calibrated log interpretation, well or formation tests, and production data where these are available. The key question is: Are there sufficient data to do the job? Here, the job is twofold. First, it concerns the meaningfulness of average parametric values over a predetermined interval or aggregate of intervals that have been penetrated by at least one well. The interval may relate to a facies object, an architectural layer, a petrophysical rock type, or a flow unit. Note that several such classifications can coexist within a given reservoir system. Second, it concerns the accuracy and precision of predictive algorithms that are used to estimate a reservoir property from one or more data inputs. These inputs may themselves be reservoir properties, or they may be other parametric measurements that are known to be related to the target reservoir property. Predictive algorithms usually are set up and applied over net-reservoir intervals identified by applying dynamically conditioned cutoffs to core-calibrated downhole measurements.
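One way to put a number on "sufficient data" for a parametric average, offered here as an illustration and not taken from the paper, is to invert the standard error of the mean. To estimate the mean within a fractional tolerance P at roughly 95% confidence (z ≈ 2), about N = (z·Cv/P)² independent samples are needed, where Cv is the coefficient of variation. With P = 0.2 this reduces to the (10·Cv)² rule of thumb sometimes quoted for core permeability. The sketch assumes independent, representative samples, which is itself a strong assumption in layered rock; the function name is hypothetical.

```python
import math
import statistics
from typing import Sequence


def required_samples(values: Sequence[float], tolerance: float = 0.2, z: float = 2.0) -> int:
    """Approximate sample count needed to estimate the arithmetic mean of
    `values` within +/- tolerance (as a fraction of the mean).

    Uses N = (z * Cv / tolerance)^2, where Cv is the sample coefficient of
    variation; assumes independent, identically distributed samples.
    """
    if len(values) < 2:
        raise ValueError("need at least two values to estimate Cv")
    mean = statistics.fmean(values)
    if mean <= 0:
        raise ValueError("mean must be positive")
    cv = statistics.stdev(values) / mean
    return math.ceil((z * cv / tolerance) ** 2)


# Illustrative plug permeabilities (mD) from one interval.
perms = [12.0, 85.0, 40.0, 230.0, 9.0, 64.0]
print(len(perms), "samples available;", required_samples(perms), "suggested")
```

A highly variable property such as permeability can call for far more samples than a well-behaved one such as porosity over the same interval.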

Data Synthesis

Essentially, data synthesis refers to scaling up input data to the reservoir-simulator scale, as determined by the adopted grid-cell height. The treatment is threefold. First, reservoir properties must be scaled up. Second, predictive algorithms that will be applied to net-reservoir volumes within a reservoir zone must be established at the larger scale. Third, the output data must be rendered dynamically conditioned; this requirement applies at all scales of measurement. The first two aspects are treated in each of two stages: core to log and log to grid cell. Here, they are applied to porosity and permeability and to the prediction of the latter from the former, but the principle can be extended to other core and log measurements.
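For predicting permeability from porosity, a widely used form (assumed here; these highlights do not state the form the authors adopted) is the semilog transform log10 k = a + b·φ. The point of the sketch is where it is fitted: the paired inputs should be net-reservoir values already scaled up to the scale at which the transform will be applied, rather than core-plug values reused at grid-cell scale. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_log_perm_transform(phi: Sequence[float], k_md: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of log10(k) = a + b * phi; returns (a, b).

    phi and k_md should be paired net-reservoir values at the target scale.
    """
    if len(phi) != len(k_md) or len(phi) < 2:
        raise ValueError("need at least two paired samples")
    y = [math.log10(k) for k in k_md]
    n = len(phi)
    mx, my = sum(phi) / n, sum(y) / n
    sxx = sum((x - mx) ** 2 for x in phi)
    if sxx == 0:
        raise ValueError("porosity values must not all be equal")
    sxy = sum((x - mx) * (yy - my) for x, yy in zip(phi, y))
    b = sxy / sxx
    return my - b * mx, b


def predict_perm(phi: float, a: float, b: float) -> float:
    """Permeability (mD) predicted by the fitted semilog transform."""
    return 10 ** (a + b * phi)


a, b = fit_log_perm_transform([0.10, 0.20, 0.30], [1.0, 10.0, 100.0])
print(round(a, 6), round(b, 6), round(predict_perm(0.25, a, b), 2))
```

Fitting the same form to plug data and to grid-cell averages generally yields different coefficients, which is the cross-scale problem the text warns against.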

Stage 1—Core to Log. Conventional core plugs usually are cut every 30 cm, with some geological license where the measured sampling point is close to a boundary between layers. It is recommended that in a key well, this sampling interval be reduced to 15 cm so that it matches the standard sampling interval of well logs. Otherwise, the assumption is made that each core plug is representative of a 30-cm layer within which it is centrally positioned. Where core inspection indicates that this assumption is invalid, some other method should be considered (e.g., whole-core measurements).
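The default Stage 1 assumption, that each plug represents a 30-cm layer centered on it, can be sketched as a mapping from plug depths onto the 15-cm log sampling grid. This is a minimal illustration with hypothetical names; depths are in metres, and log depths not covered by any plug's layer are left as None rather than interpolated.

```python
from typing import List, Optional, Sequence


def plugs_to_log_depths(plug_depths: Sequence[float], plug_values: Sequence[float],
                        log_depths: Sequence[float],
                        layer_thickness: float = 0.30) -> List[Optional[float]]:
    """Give each log depth the value of the core plug whose representative
    layer (plug depth +/- half the layer thickness) contains it.

    Depths outside every plug's layer get None, flagging gaps rather than
    filling them.
    """
    half = 0.5 * layer_thickness
    out: List[Optional[float]] = []
    for d in log_depths:
        value = None
        for pd, pv in zip(plug_depths, plug_values):
            if pd - half <= d < pd + half:
                value = pv
                break
        out.append(value)
    return out


# Plugs every 30 cm, logs every 15 cm.
print(plugs_to_log_depths([100.15, 100.45], [0.20, 0.25],
                          [100.05, 100.20, 100.35, 100.50, 100.80]))
```

Where core inspection shows that a plug does not represent its 30-cm layer, the `layer_thickness` assumption is exactly what must be replaced, for example by whole-core measurements.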

Stage 2—Log to Grid Cell. For reference, a grid-cell height of 1.5 m is adopted. This height is of the order of the grid-cell height adopted in many full-field simulators. The method detailed in the complete paper can be used for other purposes, such as scaling up to the well-test scale for purposes of comparing petrophysically derived and test-derived permeability estimates. Frequently, different geocellular models are constructed with different degrees of scaling up. Hydrocarbon-in-place volumes often are calculated on finely gridded models that are too detailed for simulation purposes, thus requiring further scaling up to a simulation-grid scale.

Stage 3—Dynamic Conditioning. After a static reservoir model has been prepared for dynamic simulation and initialization has been completed successfully, the model is tuned and history matched. This step involves adjusting a variety of parameters, including reservoir properties, so that the simulator estimates fluid-flow rates and pressures similar to historical observations. Complete historical-production data and properly interpreted pressure measurements are prerequisites for a reliable history match that has predictive capabilities. Dynamic data in the form of well production and pressure data often are considered to be independent of the construction of the static model and the initialization processes. Efforts to integrate production and static data are aimed at reducing the adverse consequences of what has become entrenched as a two-step process. The quality of a dynamic model can be improved if dynamic data are used to influence the model at the static construction phase, leading to the concept of reversible workflows.
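History matching is usually driven by some measure of mismatch between simulated and observed rates and pressures. The objective function below is a common generic choice assumed for illustration; these highlights do not specify the measure the authors used, and the function name and uncertainty values are hypothetical.

```python
import math
from typing import Sequence


def normalized_rms_misfit(observed: Sequence[float], simulated: Sequence[float],
                          sigma: Sequence[float]) -> float:
    """RMS of residuals, each scaled by an assumed measurement uncertainty.

    A value near 1 means the simulator reproduces the history to within the
    stated uncertainty; much larger values indicate a poor match.
    """
    if not (len(observed) == len(simulated) == len(sigma)) or not observed:
        raise ValueError("need equal-length, non-empty inputs")
    total = sum(((s - o) / e) ** 2 for o, s, e in zip(observed, simulated, sigma))
    return math.sqrt(total / len(observed))


# Hypothetical shut-in pressures (bar) with an assumed 2-bar gauge uncertainty.
obs = [310.0, 302.0, 295.0, 289.0]
sim = [311.5, 300.0, 296.0, 285.0]
print(round(normalized_rms_misfit(obs, sim, [2.0] * 4), 3))
```

A misfit like this only measures agreement; it says nothing about whether the static model behind it is right, which is why the text argues for bringing dynamic data into the static construction phase.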

Internal Consistency of Data

Static-model construction often is confined to analysis of geological data to define the structure and faults and to distribute reservoir properties (e.g., porosity, permeability, and net-/gross-reservoir ratio), which in turn are linked to geologically derived facies. Initializing the dynamic model involves integrating data such as fluid contacts, fluid pressure/volume/temperature (PVT) properties, capillary pressure profiles, and relative permeability curves. Many of these data elements are linked and should be analyzed together. For example, PVT tables of reservoir-fluid properties include fluid densities, which partially govern capillary pressure as a function of height above a mean free-water level, which is used to distribute the initial water saturation. Relative permeability curves, relative permeability endpoints, saturation endpoints including minimum and maximum saturations, minimum moveable saturations, and other properties are often different for different flow units or facies.
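The link between PVT densities and the initial water saturation can be sketched as follows. Reservoir capillary pressure at height h above the free-water level is Pc = (ρw − ρhc)·g·h, and a saturation-height function converts Pc to Sw. The Brooks-Corey-type form, its parameters (irreducible water saturation Swirr, entry pressure Pd, pore-size index λ), and the function names are assumptions for illustration; in practice the curve is derived from capillary-pressure data for each flow unit, converted to reservoir conditions.

```python
G = 9.80665  # standard gravity, m/s^2


def reservoir_pc(h_above_fwl_m: float, rho_w: float, rho_hc: float) -> float:
    """Capillary pressure (Pa) at a height above the free-water level, from
    water and hydrocarbon densities (kg/m^3) at reservoir conditions."""
    return (rho_w - rho_hc) * G * max(h_above_fwl_m, 0.0)


def water_saturation(pc_pa: float, swirr: float, pd_pa: float, lam: float) -> float:
    """Assumed Brooks-Corey-type drainage curve: Sw = 1 up to the entry
    pressure, then Sw = Swirr + (1 - Swirr) * (Pd / Pc)^lam."""
    if pc_pa <= pd_pa:
        return 1.0
    return swirr + (1.0 - swirr) * (pd_pa / pc_pa) ** lam


# Hypothetical oil/brine column: densities from PVT, curve parameters from SCAL.
for h in (0.0, 5.0, 20.0, 60.0):
    pc = reservoir_pc(h, rho_w=1050.0, rho_hc=750.0)
    print(h, round(water_saturation(pc, swirr=0.15, pd_pa=5000.0, lam=0.8), 3))
```

Any error in the PVT densities changes Pc at every height and therefore the whole initial-saturation profile, which is why these data elements should be analyzed together.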

Many reservoir engineers regard the initialization process as one of calculating initial-fluid-saturation distributions, estimating initial fluid-in-place volumes, and comparing these with volumes obtained through static modeling. Discrepancies are reconciled when the reservoir model is debugged before running the simulator. While this paper advocates a broader perception of initialization, traditional checks that are carried out under a narrower definition of the term are vitally important. They include initial fluid saturations, equilibrium, saturation endpoints, relative permeability endpoints, relative permeability curves, sequence of permeability modeling, and sequence of assigning the net-/gross-reservoir ratio.
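Several of the traditional checks listed above lend themselves to automation. The sketch below, with a hypothetical data structure and a check list chosen for illustration, flags three kinds of inconsistency for one flow unit: an empty mobile-saturation range, relative permeability endpoints outside (0, 1], and initialized cells whose water saturation lies below connate or above 1. A real workflow would run such checks per flow unit and in the simulator's own conventions.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class FlowUnitEndpoints:
    """Hypothetical saturation and relative permeability endpoints for one flow unit."""
    swc: float      # connate (irreducible) water saturation
    sorw: float     # residual oil saturation to waterflood
    krw_max: float  # water relative permeability at Sw = 1 - Sorw
    kro_max: float  # oil relative permeability at Sw = Swc


def endpoint_problems(ep: FlowUnitEndpoints, initial_sw: Iterable[float]) -> List[str]:
    """Return human-readable inconsistencies; an empty list means none were found."""
    problems = []
    if not 0.0 <= ep.swc < 1.0 - ep.sorw <= 1.0:
        problems.append("need 0 <= Swc < 1 - Sorw <= 1 so that a mobile range exists")
    for name, kr in (("krw_max", ep.krw_max), ("kro_max", ep.kro_max)):
        if not 0.0 < kr <= 1.0:
            problems.append(f"{name} = {kr} lies outside (0, 1]")
    sw = list(initial_sw)
    below = sum(1 for s in sw if s < ep.swc)
    if below:
        problems.append(f"{below} cell(s) have initial Sw below Swc")
    above = sum(1 for s in sw if s > 1.0)
    if above:
        problems.append(f"{above} cell(s) have initial Sw above 1")
    return problems


unit = FlowUnitEndpoints(swc=0.20, sorw=0.25, krw_max=0.30, kro_max=0.90)
print(endpoint_problems(unit, [0.18, 0.35, 0.80, 1.00]))
```

Flagging rather than silently correcting such cells keeps the discrepancy visible, so it can be traced back to the saturation-height function or to the endpoint data.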

Heterogeneity and Anisotropy

In geoscience, heterogeneity often refers to variations in the composition and texture of rocks. For purposes of initialization, a broader interpretation must be adopted that takes into account variations in pore characteristics, reservoir fluids, and the wetting phase because all of these properties affect displacement efficiency and fluid storage and flow. These matters affect the previously described procedures at three levels: the representativeness of core data, log data, and grid-cell data.

Anisotropy. Permeability is a tensor quantity and generally is anisotropic, with different magnitudes in different directions. The most significant anisotropy usually is in the contrast between permeability measured perpendicular to bedding planes (commonly called vertical permeability) and permeability measured parallel to bedding planes (horizontal permeability). Permeability is the only reservoir property to which anisotropic considerations apply directly; all other properties (e.g., porosity and net-/gross-reservoir ratio) are scalar quantities, although there may have been some directional dependence in their determination (e.g., collimated density measurements for porosity, dynamically conditioned cutoffs for net-/gross-reservoir ratio). Moreover, the gradient of a scalar property, a proxy for heterogeneity, is directionally dependent and, therefore, is subject to anisotropic considerations. For example, porosity variability in the vertical direction differs from porosity variability in the horizontal direction. Only fluid properties (i.e., viscosity and density) appear directly as gradient terms in the fluid-flow equations. Porosity and net-/gross-reservoir ratio are not gradient terms, a fact that greatly facilitates scaling up these properties, the objective of which should be to conserve volume.

Anisotropy may also exist within the horizontal plane itself (e.g., where sedimentary deposition has taken place under the influence of unidirectional forces, as in highly channelized systems). However, this type of anisotropy usually is less pronounced than the contrast between vertical and horizontal permeability, and it often is ignored for simulation purposes. Furthermore, horizontal anisotropy is seldom quantified through core analysis. Anisotropy is most easily dealt with by orienting the grid directions parallel to the principal axes of the permeability tensor, which removes the need to handle off-diagonal tensor terms in simulation algorithms. Where horizontal anisotropy exists, the principal directions usually are controlled geologically, and they can be accommodated by use of a geologically influenced grid orientation.
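Finding the principal directions with which the grid should be aligned can be sketched for the horizontal plane. For a symmetric 2 × 2 tensor with components kxx, kyy, and kxy, the principal permeabilities are the eigenvalues (kxx + kyy)/2 ± √[((kxx − kyy)/2)² + kxy²], and the maximum lies at θ = ½·atan2(2kxy, kxx − kyy) from the x-axis. Grid axes oriented along θ and θ + 90° see a diagonal tensor. The function name and example values are hypothetical.

```python
import math
from typing import Tuple


def principal_horizontal_perm(kxx: float, kyy: float, kxy: float) -> Tuple[float, float, float]:
    """Return (k_max, k_min, theta_deg) for a symmetric 2x2 permeability tensor.

    theta_deg is the angle from the x-axis to the k_max direction, in [0, 180).
    """
    mean = 0.5 * (kxx + kyy)
    radius = math.hypot(0.5 * (kxx - kyy), kxy)
    theta = 0.5 * math.degrees(math.atan2(2.0 * kxy, kxx - kyy))
    return mean + radius, mean - radius, theta % 180.0


# Channel-aligned example: equal kxx and kyy with a strong off-diagonal term.
print(principal_horizontal_perm(150.0, 150.0, 50.0))
```

Here the grid would be rotated 45° so that its axes carry 200 mD and 100 mD with no cross terms.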

Vertical-/horizontal-permeability contrasts can have a significant effect on actual and predicted reservoir performance. At the core-plug scale, anisotropy manifests itself through flow paths of different tortuosity, resulting from sedimentological phenomena such as grain orientation and fine clay-mineral layers. On a larger scale, shale bands affect anisotropy. Here, the vertical-/horizontal-permeability ratio depends greatly on the degree of scaling up. Permeability anisotropy must be quantified both at the grid-cell-thickness level, for input directly into the fluid-flow equations, and at a scale much finer than that of the grid cell, to enable generating appropriate pseudorelative permeability curves, which are affected by permeability anisotropy.
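How a thin shale band controls the vertical/horizontal ratio after scaling up can be sketched with the classical bounds for a layered stack: thickness-weighted arithmetic averaging for flow parallel to layering and harmonic averaging for flow across it. The sketch assumes laterally continuous, internally isotropic layers; it does not address the finer-scale anisotropy needed for pseudorelative permeability curves. The function name and example values are hypothetical.

```python
from typing import Sequence, Tuple


def layered_kv_kh(thickness_m: Sequence[float], k_md: Sequence[float]) -> Tuple[float, float]:
    """Effective vertical and horizontal permeability (mD) of a layered stack.

    Horizontal flow sees the thickness-weighted arithmetic mean; vertical
    flow sees the thickness-weighted harmonic mean.
    """
    if len(thickness_m) != len(k_md) or not k_md:
        raise ValueError("need equal-length, non-empty inputs")
    if any(k <= 0 for k in k_md) or any(t <= 0 for t in thickness_m):
        raise ValueError("thicknesses and permeabilities must be positive")
    h = sum(thickness_m)
    kh = sum(t * k for t, k in zip(thickness_m, k_md)) / h
    kv = h / sum(t / k for t, k in zip(thickness_m, k_md))
    return kv, kh


# A 1.5-m cell: 1.4 m of 100-mD sand and a 0.1-m shale band at 0.001 mD.
kv, kh = layered_kv_kh([1.4, 0.1], [100.0, 0.001])
print(f"kv = {kv:.4f} mD, kh = {kh:.2f} mD, kv/kh = {kv / kh:.1e}")
```

In this example the shale band barely changes kh (about 93 mD) but drops kv to about 0.015 mD, so the ratio at grid-cell scale is governed by the band rather than by plug-scale anisotropy in the sand.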

Workflows for Initialization

The workflows selected for initializing a reservoir-model/-simulation study are influenced strongly by the purpose of the study. Complex workflows that include detailed stratigraphic and facies constraints do not necessarily provide better results than simple workflows that focus on functionality. The emphasis should be on correct representation of reliable data rather than on complexity. The authors emphasize the value that can be added to any procedure that involves processing data by ensuring that the data are quality checked and by using them in conjunction with complementary data sets from independent sources. The workflow leading up to initialization involves large volumes of data covering diverse disciplines; the authors focused on a subset of key areas. They believe that following the correct sequence and integrating diverse data sets appropriately can improve the initialized model. Workflows for conventional and special core analysis, log analysis, scaling up, and dynamic conditioning are presented in Tables 2 through 6 in the complete paper.

This article, written by Senior Technology Editor Dennis Denney, contains highlights of paper SPE 160248, “Optimizing the Value of Reservoir Simulation Through Quality-Assured Initialization,” by Paul F. Worthington, SPE, Gaffney, Cline & Associates, and Shane K.F. Hattingh, SPE, ERC Equipoise, prepared for the 2012 SPE Asia Pacific Oil and Gas Conference and Exhibition, Perth, Australia, 22–24 October. The paper has not been peer reviewed.